How to Add a Public Transport Widget to an Eyeson Video Call

Displaying live public transit data in Eyeson calls can help participants coordinate meetups or travel plans. With this code example, we'll see how to fetch real-time transit data and display it in an Eyeson video meeting.

Important to Note

- This code example is only for demo purposes! Make sure to adjust it to your needs and handle error cases correctly!

- This demo uses the API of transitapp.com, which does not send CORS headers such as Access-Control-Allow-Origin. To make it work in the browser, you can use a CORS-related add-on or follow these instructions: https://medium.com/@beligh.hamdi/run-chrome-browser-without-cors-872747142c61

- The API used in this demo is available at https://transitapp.com/apis. The free tier allows 5 calls per minute.

Fetching Transit Data

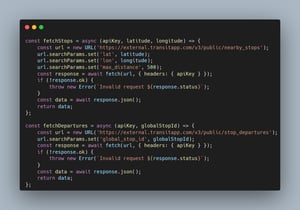

First, we must fetch the transit data from the Transit API. We'll be using two functions for this, both of which appear in the full code example below.

The fetchStops function gets the nearby stops for a given location. fetchDepartures is then called once per stop to fetch the departures for that stop's global_stop_id.
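As a minimal sketch of the request side, the two endpoint URLs can be built as shown here. The endpoint paths and query parameter names are taken from the full code example; the helper names buildNearbyStopsUrl and buildStopDeparturesUrl, the sample coordinates, and the sample stop ID are hypothetical and only serve as an illustration.

```javascript
const TRANSIT_API_BASE = 'https://external.transitapp.com/v3/public';

// Hypothetical helper: URL for stops within maxDistance meters of a position.
const buildNearbyStopsUrl = (latitude, longitude, maxDistance = 500) => {
  const url = new URL(`${TRANSIT_API_BASE}/nearby_stops`);
  url.searchParams.set('lat', latitude);
  url.searchParams.set('lon', longitude);
  url.searchParams.set('max_distance', maxDistance);
  return url;
};

// Hypothetical helper: URL for the departures of a single stop.
const buildStopDeparturesUrl = (globalStopId) => {
  const url = new URL(`${TRANSIT_API_BASE}/stop_departures`);
  url.searchParams.set('global_stop_id', globalStopId);
  return url;
};

// Sample values, not real data.
const stopsUrl = buildNearbyStopsUrl(48.2082, 16.3738);
const departuresUrl = buildStopDeparturesUrl('EXAMPLE:123');
console.log(stopsUrl.href);
console.log(departuresUrl.href);
```

Both URLs are then passed to fetch together with the apiKey header, as fetchStops and fetchDepartures do in the full code example.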

Getting Location Coordinates

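To query the right location, we'll use the browser's Geolocation API to get the user's current coordinates. The getCurrentCoordinates function in the full code example wraps navigator.geolocation.getCurrentPosition in a Promise.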

Formatting the Data

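The getTransitData function then combines the stops and departures data into a single object: it keeps the four nearest named stops, collects the departures of the next 15 minutes for each of them, merges stops that share a name, and sorts each list by departure time.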
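The merge-and-sort step can be tried in isolation with a small sketch like the following. The function name groupDeparturesByStopName and the sample stops are hypothetical; the field names stop_name, departures, route_short_name, and departure_time follow the full code example.

```javascript
// Hypothetical sample input: stops after their departures have been attached.
const sampleStops = [
  { stop_name: 'Main Square', departures: [{ route_short_name: '2', departure_time: 1700000600 }] },
  { stop_name: 'Main Square', departures: [{ route_short_name: '71', departure_time: 1700000300 }] },
  { stop_name: 'Opera', departures: [] },
];

const groupDeparturesByStopName = (stops) => {
  const grouped = {};
  stops.forEach(({ stop_name, departures = [] }) => {
    // Build a new array on every merge so the input objects are not mutated.
    grouped[stop_name] = [...(grouped[stop_name] ?? []), ...departures];
  });
  // Earliest departure first within each stop.
  Object.values(grouped).forEach(list => list.sort((a, b) => a.departure_time - b.departure_time));
  return grouped;
};

const grouped = groupDeparturesByStopName(sampleStops);
console.log(Object.keys(grouped)); // [ 'Main Square', 'Opera' ]
console.log(grouped['Main Square'].map(d => d.route_short_name)); // [ '71', '2' ]
```

Stops on opposite sides of a street often share a name, which is why the departures are merged under one heading instead of being listed per stop ID.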

Drawing to a Canvas

Now we'll draw the transit data onto an HTML canvas to generate an image. The CanvasHelper class is used to measure text content in order to know the correct size of the background box.
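The measure-first idea can be sketched without a real canvas: elements are queued together with their measured size, the bounding box of the queue determines the background rectangle, and the background is moved to the front of the queue so that it is drawn first. The names DrawQueue and addBackground and the fixed element sizes are hypothetical; in the browser, the width would come from context.measureText(text).width.

```javascript
class DrawQueue {
  constructor() {
    this.stack = [];
  }

  // Queue an element and return its width so the caller can advance x.
  add(element) {
    this.stack.push(element);
    return element.width;
  }

  // Compute the box around all queued elements and put it first in the queue.
  addBackground(padding, color) {
    const left = Math.min(...this.stack.map(e => e.x));
    const top = Math.min(...this.stack.map(e => e.y));
    const right = Math.max(...this.stack.map(e => e.x + e.width));
    const bottom = Math.max(...this.stack.map(e => e.y + e.height));
    const background = {
      type: 'rect',
      x: left - padding,
      y: top - padding,
      width: right - left + 2 * padding,
      height: bottom - top + 2 * padding,
      color,
    };
    this.stack.unshift(background); // drawn first, so it ends up behind the text
    return background;
  }
}

// Sample elements with made-up sizes.
const queue = new DrawQueue();
queue.add({ type: 'text', text: 'Main Square', x: 40, y: 40, width: 90, height: 24 });
queue.add({ type: 'text', text: "3' 71 ➞ Central Station", x: 50, y: 69, width: 180, height: 24 });
const background = queue.addBackground(20, '#efefefcc');
console.log(background);
```

The CanvasHelper class in the full code example follows the same pattern: nothing is painted until draw() is called, which makes it possible to size the background after all text has been measured.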

Sending to Meeting

Finally, the sendLayer function uploads the generated image to the Eyeson meeting as a foreground layer.
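The upload is a multipart form POST, and its payload can be sketched separately from the network call. The helper names buildLayerForm and layerUrl are hypothetical, and the placeholder Blob is not a real image. The value '1' for the foreground comes from the full code example; '-1' for a background layer is an assumption that should be verified against the Eyeson layers reference. The sketch also assumes a runtime with global FormData and Blob (browsers or Node.js 18+).

```javascript
// Hypothetical helper: form payload for the layers endpoint.
const buildLayerForm = (blob, foreground = true) => {
  const formData = new FormData();
  formData.set('file', blob, 'image.png');
  // '1' = foreground (as in the demo); '-1' = background (assumption, see above)
  formData.set('z-index', foreground ? '1' : '-1');
  return formData;
};

// Hypothetical helper: endpoint URL for a given meeting access key.
const layerUrl = (accessKey) => `https://api.eyeson.team/rooms/${accessKey}/layers`;

// Placeholder content instead of the PNG produced by canvas.toBlob().
const placeholder = new Blob(['not a real PNG'], { type: 'image/png' });
const form = buildLayerForm(placeholder);
console.log(layerUrl('<YOUR ACCESS KEY>'), form.get('z-index'));
```

In the demo, the resulting form is sent with fetch(layerUrl(accessKey), { method: 'POST', body: form }); the browser sets the multipart Content-Type header automatically.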

Launching the Program

Now that all functions are in place, we can call them step by step. Note that transitApiKey must be a valid API key from transitapp.com and meetingAccessKey must be a valid access key of a running Eyeson meeting. The widescreen flag should match the widescreen option chosen when the meeting was started; it switches the canvas from 1280×960 to 1280×720. The run function could be executed every minute to keep the transit data up to date.
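One way to repeat the update every minute is a small polling helper, sketched here under a few assumptions: the name startPolling, the onError parameter, and the stand-in task are hypothetical, and in the demo the task would be the run function. Each run makes up to five Transit API calls (one for nearby stops plus up to four for departures), so a one-minute interval uses the whole free-tier budget.

```javascript
// Hypothetical helper: runs task immediately and then every intervalMs.
// Returns a function that stops the polling.
const startPolling = (task, intervalMs = 60000, onError = console.error) => {
  const tick = async () => {
    try {
      await task();
    } catch (error) {
      onError(error); // keep polling even if a single update fails
    }
  };
  tick(); // first update right away
  const timer = setInterval(tick, intervalMs);
  return () => clearInterval(timer);
};

// Usage with a stand-in task; in the demo this would be `run`.
let updates = 0;
const stopPolling = startPolling(async () => { updates += 1; });
stopPolling(); // stop again so this sketch terminates
console.log(updates); // 1
```

Catching errors inside the tick matters here because geolocation, the Transit API, and the layer upload can each fail independently, and one failed update should not end the loop.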

The Result

You can see the result of the working code below:

const run = async () => {
  const transitApiKey = '<YOUR TRANSIT API KEY>'; // https://transitapp.com/apis
  const meetingAccessKey = '<YOUR ACCESS KEY>';
  const widescreen = true;
  const { latitude, longitude } = await getCurrentCoordinates();
  const now = Math.trunc(Date.now() / 1000); // in seconds
  const transitData = await getTransitData(transitApiKey, latitude, longitude, now);
  const blob = await drawOnCanvas(transitData, now, widescreen);
  await sendLayer(blob, meetingAccessKey);
};

Full Code Example

const fetchStops = async (apiKey, latitude, longitude) => {
  const url = new URL('https://external.transitapp.com/v3/public/nearby_stops');
  url.searchParams.set('lat', latitude);
  url.searchParams.set('lon', longitude);
  url.searchParams.set('max_distance', 500);
  const response = await fetch(url, { headers: { apiKey } });
  if (!response.ok) {
    throw new Error(`Invalid request ${response.status}`);
  }
  const data = await response.json();
  return data;
};

const fetchDepartures = async (apiKey, globalStopId) => {
  const url = new URL('https://external.transitapp.com/v3/public/stop_departures');
  url.searchParams.set('global_stop_id', globalStopId);
  const response = await fetch(url, { headers: { apiKey } });
  if (!response.ok) {
    throw new Error(`Invalid request ${response.status}`);
  }
  const data = await response.json();
  return data;
};

const getCurrentCoordinates = () => {
  return new Promise((resolve, reject) => {
    // https://developer.mozilla.org/en-US/docs/Web/API/Geolocation/getCurrentPosition
    navigator.geolocation.getCurrentPosition(({ coords }) => {
      resolve(coords);
    }, error => {
      reject(error);
    }, {
      enableHighAccuracy: true,
      timeout: 5000,
      maximumAge: 0,
    });
  });
};

const getTransitData = async (transitApiKey, latitude, longitude, now) => {
  const maxTime = now + 15 * 60; // now + 15 minutes
  const { stops } = await fetchStops(transitApiKey, latitude, longitude);
  const stopsSortByDistance = stops
    .filter(entry => entry.stop_name !== '') // stop name is required
    .sort((a, b) => a.distance - b.distance) // sort by nearest distance
    .slice(0, 4); // 5 calls/minute allowed, so fetch only first 4 stations
  const promises = stopsSortByDistance.map(async stop => {
    const { route_departures } = await fetchDepartures(transitApiKey, stop.global_stop_id);
    const departures = [];
    route_departures.forEach(route => {
      const { route_text_color, route_color, route_type, route_short_name, itineraries } = route;
      itineraries.forEach(itinerary => {
        const { headsign, schedule_items } = itinerary;
        schedule_items
          .filter(({ departure_time }) => departure_time <= maxTime) // cap at max time (15 minutes)
          .forEach(({ departure_time }) => {
            // grab only necessary data
            departures.push({ route_text_color: `#${route_text_color}`, route_color: `#${route_color}`, route_type, route_short_name, headsign, departure_time });
          });
      });
    });
    stop.departures = departures;
  });
  // allSettled never rejects, so a failed request leaves stop.departures undefined
  await Promise.allSettled(promises);
  const groupByStopName = {};
  // default to an empty list for stops whose departures request failed
  stopsSortByDistance.forEach(({ stop_name, departures = [] }) => {
    if (groupByStopName[stop_name] === undefined) {
      groupByStopName[stop_name] = departures;
    } else {
      groupByStopName[stop_name].push(...departures); // merge all departures
    }
  });
  Object.keys(groupByStopName).forEach(stop => {
    groupByStopName[stop] = groupByStopName[stop].sort((a, b) => a.departure_time - b.departure_time); // sort merged departures by shortest time
  });
  return groupByStopName;
};

/**
 * CanvasHelper
 * Provides text size measurement and an elements stack
 * Needed to measure content before actually drawing the background box
 */
class CanvasHelper {
  constructor(context) {
    this.stack = [];
    this.context = context;
  }

  measureText(text, font) {
    this.context.font = font;
    return this.context.measureText(text).width;
  }

  addText(text, x, y, font, color) {
    this.stack.push({ type: 'text', text, x, y, font, color });
    return this.measureText(text, font);
  }

  addRect(x, y, width, height, color, radius) {
    this.stack.push({ type: 'rect', x, y, width, height, color, radius });
    return width;
  }

  draw() {
    const { context } = this;
    this.stack.forEach(({ type, text, x, y, font, width, height, color, radius }) => {
      if (type === 'text') {
        context.font = font;
        context.fillStyle = color;
        context.fillText(text, x, y);
      } else if (type === 'rect') {
        context.fillStyle = color;
        if (supportsRoundRect) {
          context.beginPath();
          context.roundRect(x, y, width, height, radius);
          context.fill();
        } else {
          context.fillRect(x, y, width, height);
        }
      }
    });
  }
}

const supportsRoundRect = typeof CanvasRenderingContext2D.prototype.roundRect === 'function';

const drawOnCanvas = async (groupOfStops, now, widescreen) => {
  const canvas = document.createElement('canvas');
  canvas.width = 1280;
  canvas.height = widescreen ? 720 : 960;
  const context = canvas.getContext('2d');
  const canvasHelper = new CanvasHelper(context);
  const fontSize = 16;
  const lineHeight = 24;
  const rectHeight = 20;
  const fontFamily = 'system-ui, sans-serif';
  const fontColor = '#000';
  let x = 40;
  let y = 40;
  let widest = 0;
  let highest = 0;
  const maxContentHeight = canvas.height - 40;
  context.fillStyle = fontColor;
  context.textAlign = 'left';
  context.textBaseline = 'top';
  Object.entries(groupOfStops).every(([stop, routes]) => {
    x = 40;
    canvasHelper.addText(stop, x, y, `bold ${fontSize}px ${fontFamily}`, fontColor);
    y += lineHeight + 5;
    routes.every(route => {
      const { route_text_color, route_color, route_type, route_short_name, headsign, departure_time } = route;
      const minutes = Math.floor((departure_time - now) / 60);
      x = 50;
      x += canvasHelper.addText(`${minutes}' `, x, y, `bold ${fontSize}px ${fontFamily}`, fontColor);
      const routeNameWidth = canvasHelper.measureText(route_short_name, `bold ${fontSize}px ${fontFamily}`);
      const rectWidth = canvasHelper.addRect(x, y - (rectHeight - fontSize) / 2, routeNameWidth + 14, rectHeight, route_color, rectHeight / 2);
      canvasHelper.addText(route_short_name, x + 7, y, `bold ${fontSize}px ${fontFamily}`, route_text_color);
      x += canvasHelper.addText(` ➞ ${headsign}`, x + rectWidth, y, `${fontSize}px ${fontFamily}`, fontColor) + rectWidth;
      y += lineHeight;
      if (x > widest) {
        widest = x; // measure largest content width for background box
      }
      return y < maxContentHeight; // avoid overflow
    });
    highest = y; // measure largest content height for background box
    y += 5;
    return y < maxContentHeight; // avoid overflow
  });
  canvasHelper.addRect(20, 20, widest, highest, '#efefefcc', 20);
  canvasHelper.stack.unshift(canvasHelper.stack.pop()); // move background box to the beginning of elements stack
  canvasHelper.draw();
  const blob = await new Promise(resolve => canvas.toBlob(blob => resolve(blob)));
  return blob;
};

const sendLayer = async (blob, accessKey) => {
  // https://docs.eyeson.com/docs/rest/references/layers
  const formData = new FormData();
  formData.set('file', blob, 'image.png');
  formData.set('z-index', '1'); // foreground
  const response = await fetch(`https://api.eyeson.team/rooms/${accessKey}/layers`, { method: 'POST', body: formData });
  if (!response.ok) {
    throw new Error(`${response.status} ${response.statusText}`);
  }
};

const run = async () => {
  const transitApiKey = '<YOUR TRANSIT API KEY>'; // https://transitapp.com/apis
  const meetingAccessKey = '<YOUR ACCESS KEY>';
  const widescreen = true;
  const { latitude, longitude } = await getCurrentCoordinates();
  const now = Math.trunc(Date.now() / 1000); // in seconds
  const transitData = await getTransitData(transitApiKey, latitude, longitude, now);
  const blob = await drawOnCanvas(transitData, now, widescreen);
  await sendLayer(blob, meetingAccessKey);
};

Conclusion

Keep in mind that Eyeson is a communication platform: it lets you embed public transport data into a call and keep it up to date in real time. This opens up many creative possibilities for integrating contextual live information into Eyeson meetings. Subscribe to our Tech Blog to read more articles like this one.